Understanding Black Box AI

Black Box AI refers to artificial intelligence systems whose internal workings are hidden or too complex to interpret. Users and other stakeholders can see the inputs a system receives and the outputs it produces, but not how it gets from one to the other. Like an impenetrable fortress, a black box AI model arrives at conclusions without offering any insight into its decision-making process. These models typically run on networks of artificial neurons linked by millions or even billions of weighted connections. That scale and complexity make their internal mechanisms, and the factors behind any individual decision, difficult or impossible to trace.
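To put the scale in concrete terms, the short Python sketch below counts the learnable parameters (connection weights plus one bias per neuron) in a fully connected network. The layer sizes are a hypothetical example of a small handwritten-digit classifier, not a model described in this article. Production deep learning models are usually far larger.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected (dense) network.

    A layer with n_in inputs and n_out outputs has n_in * n_out weights
    plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical small classifier: 28x28 pixel input, two hidden layers, 10 classes.
sizes = [784, 256, 128, 10]
print(count_parameters(sizes))  # 235146
```

Even this modest network has over 235,000 individually tuned numbers. None of them on its own corresponds to a human-readable rule, which is the practical meaning of "black box".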

In stark contrast to Black Box AI, Explainable AI (XAI) aims to make a model’s reasoning understandable to the people who use it or are affected by it, including non-specialists. By providing transparency into its decision-making process, Explainable AI stands as the antithesis of the enigmatic Black Box AI.

How does black box machine learning work?

In artificial intelligence, deep learning stands at the forefront of innovation and has transformed a range of industries. However, the way these models reach their outputs often falls into what is known as “black box development.” Here we look at how deep learning works: a model is trained on large numbers of examples, often millions of data points, and learns to produce outputs that correlate with specific features of that data.

Embracing the Intricacies of Black Box Development: Deep learning, a subset of machine learning (itself a branch of artificial intelligence), uses multi-layered neural networks to process large volumes of data and find patterns that would be impractical for people to identify by hand. Central to its functioning is the concept of “black box development”: the model’s parameters can be inspected, but they do not translate into reasoning a human can follow. Rather than following explicit rules, the learning algorithm works through countless data points and adjusts its internal weights to link inputs to outputs, all without revealing why a given decision was made.

Unravelling the Data Correlation: At the heart of deep learning lies the ability to correlate specific data features with desired outputs, a pivotal factor in its success. The algorithm uses large datasets to identify patterns and relationships among input variables and maps them to outputs such as labels or predictions. This data-driven correlation process is what enables deep learning models to excel at tasks like image recognition, natural language processing, and predictive analytics.
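The idea of learning a correlation between features and outputs can be shown at the smallest possible scale: a single artificial neuron (logistic regression) fitted by gradient descent. This is a minimal sketch on hypothetical synthetic data, not a deep network. Deep models stack many such units, which is what makes their learned weights hard to interpret. Note that the model is never told the rule: it discovers from the data that feature 0 matters and feature 1 does not.

```python
import math
import random


def train_logistic(X, y, lr=0.5, epochs=500):
    """Fit a single-neuron logistic model with batch gradient descent."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            z = sum(wj * xj for wj, xj in zip(w, xi)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            err = p - yi                    # gradient of log-loss w.r.t. z
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step every parameter against its average gradient.
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict(w, b, x):
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if z > 0 else 0


rng = random.Random(0)
# Hypothetical data: the label depends only on feature 0; feature 1 is noise.
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200)]
y = [1 if x[0] > 0 else 0 for x in X]

w, b = train_logistic(X, y)
accuracy = sum(predict(w, b, x) == t for x, t in zip(X, y)) / len(X)
print("weights:", w, "bias:", b, "training accuracy:", accuracy)
```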

The Advantages and Challenges of Black Box Development: The appeal of black box development lies in its ability to handle complex problems without relying on explicit rules or human-crafted logic. Because the model learns from examples, it can be retrained or fine-tuned as new data becomes available, which makes it highly flexible and versatile. However, the lack of transparency poses challenges: stakeholders may struggle to understand how decisions are reached, raising concerns about accountability and bias.

Bridging the Gap with Explainable AI: While black box development empowers deep learning models, the quest for transparency has led to the emergence of Explainable AI. This exciting field focuses on enhancing model interpretability, enabling stakeholders to understand the underlying reasoning behind AI-driven decisions. As research progresses, striking a balance between black box power and explainability becomes a crucial aspect of shaping the future of AI technology.
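One widely used explainability technique that treats the model purely as a black box is permutation feature importance. It shuffles one input feature at a time and measures how much accuracy drops. The sketch below is a minimal pure-Python version under stated assumptions: the `black_box` function and the data are hypothetical stand-ins. Libraries such as scikit-learn ship their own implementation (`sklearn.inspection.permutation_importance`).

```python
import random


def accuracy(predict, X, y):
    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average drop in accuracy when each feature column is randomly shuffled.

    `predict` is treated as a black box: only its inputs and outputs are used.
    """
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [x[j] for x in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by the shuffled value.
            X_perm = [x[:j] + [v] + x[j + 1:] for x, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black box: internally it only looks at feature 0.
def black_box(x):
    return 1 if x[0] > 0.5 else 0


rng = random.Random(42)
X = [[rng.random(), rng.random()] for _ in range(300)]
y = [black_box(x) for x in X]
print(permutation_importance(black_box, X, y))
```

Shuffling feature 1 leaves predictions unchanged (importance 0), while shuffling feature 0 roughly halves accuracy. This reveals which input drives the decisions without opening the model.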

Deep learning modeling, driven by black box development, has revolutionized the landscape of artificial intelligence. Its ability to process vast data points and correlate specific features has led to unprecedented advancements in various domains. As the AI community progresses, the pursuit of transparency through Explainable AI will play a vital role in harnessing the full potential of deep learning while ensuring accountability and ethical practices in the exciting world of AI.

What are the implications of black box AI?

Deep learning models using black box strategies have transformed the landscape of artificial intelligence, achieving remarkable feats across various industries. However, the opacity of these models poses significant challenges, raising concerns about AI bias, lack of transparency and accountability, inflexibility, and security vulnerabilities. Below we look at the potential issues surrounding black box AI and emphasise the importance of addressing them for a responsible and ethical AI-driven future.

AI Bias: Uncovering the Impact of Unrecognised Prejudices

AI bias is a critical issue that can seep into algorithms through skewed or unrepresentative training data, the unconscious biases of developers, or errors that go undetected during training. The consequences of biased algorithms can be severe, producing skewed results that may offend or discriminate against affected individuals or groups. Because a black box model cannot explain its decisions, such bias is also harder to detect. To mitigate this risk, developers must prioritise transparency in their algorithms, adhere to AI regulations, and take accountability for the effects of their AI solutions.
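Even when a model is opaque, its outputs can be audited for bias. A simple starting point is to compare selection rates (the share of positive decisions) across groups, a measure often called demographic parity. The sketch below uses hypothetical loan-approval outputs. Demographic parity is only one of several fairness metrics, and a large gap is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) for applicants in groups A and B.
preds = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
print(selection_rates(preds, groups))  # {'A': 0.8, 'B': 0.2}
print(demographic_parity_difference(preds, groups))
```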

Lack of Transparency and Accountability: Navigating the Complexity

The complexity of black box neural networks makes these models hard to understand and audit, even for their creators. Despite their groundbreaking achievements, this lack of transparency is problematic in high-stakes sectors like healthcare, banking, and criminal justice, where model decisions affect people’s lives. To ensure accountability, developers must strive to enhance transparency and establish mechanisms that hold people responsible for the outcomes of opaque models.
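One practical accountability mechanism is an audit trail. If every automated decision is recorded with the model version that made it and the team responsible for it, outcomes can be traced later. The sketch below is a hypothetical minimal design: the record fields, model name, and team name are illustrative assumptions, not a standard schema. Inputs are hashed so the log can link a decision to its data without storing personal details in plain form.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    timestamp: str       # when the decision was made (UTC, ISO 8601)
    model_version: str   # which model produced it
    input_hash: str      # SHA-256 of the canonicalised input features
    output: str          # the decision itself
    owner: str           # person or team accountable for this deployment


def log_decision(log, model_version, features, output, owner):
    """Append an audit record; inputs are hashed rather than stored raw."""
    payload = json.dumps(features, sort_keys=True).encode("utf-8")
    record = DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        input_hash=hashlib.sha256(payload).hexdigest(),
        output=output,
        owner=owner,
    )
    log.append(asdict(record))
    return record


audit_log = []
log_decision(audit_log, "credit-model-v3", {"income": 52000, "age": 41}, "approved", "risk-team")
print(json.dumps(audit_log, indent=2))
```

Sorting keys before hashing means the same input always produces the same hash, regardless of field order.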

Limited Flexibility: The Constraints of Black Box AI

Flexibility is a crucial aspect of AI adaptability, but black box AI models often fall short in this regard. Their behaviour is spread across many learned parameters rather than explicit rules. Changing it, for example to accommodate new requirements or to handle sensitive data differently, therefore usually means retraining rather than making a targeted edit, which limits their practicality in some applications. Decision-makers must exercise caution when processing sensitive information with black box AI and explore alternatives that offer greater flexibility without compromising privacy or accuracy.

Security Flaws: Guarding Against Threats

The vulnerability of black box AI models to external attacks is a pressing concern. Threat actors may exploit flaws in a model by subtly manipulating its input data, potentially leading to incorrect or even hazardous decisions. To safeguard against such threats, developers must prioritise robust security measures, employ rigorous testing protocols, and continuously update and reinforce their models against emerging risks.
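Input manipulation of this kind is known as an adversarial example. A common recipe, the fast gradient sign method (FGSM), nudges every input feature by a small amount ε in the direction that most changes the model’s score. For a neural network, that direction comes from the gradient. The sketch below substitutes a linear classifier, whose score gradient with respect to the input is simply its weight vector, so it stays short and dependency-free. The weights and input are hypothetical.

```python
def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_example(w, b, x, epsilon):
    """FGSM-style step for a linear model: move each feature by epsilon against
    the current prediction. The score's gradient w.r.t. x is just w."""
    direction = -1 if classify(w, b, x) == 1 else 1
    return [xi + direction * epsilon * sign(wi) for xi, wi in zip(x, w)]


# Hypothetical trained weights and an input the model currently labels 1.
w, b = [0.9, -0.4, 0.6], -0.1
x = [0.3, 0.2, 0.1]
x_adv = adversarial_example(w, b, x, epsilon=0.15)
print(classify(w, b, x), classify(w, b, x_adv))  # 1 0
```

No feature moves by more than 0.15, yet the score falls by ε times the sum of the absolute weights (0.15 × 1.9 = 0.285), enough to flip the decision. In high-dimensional inputs such as images, many tiny changes add up in the same way.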

Black box AI, while powerful, presents notable challenges that demand our attention and vigilance. As the AI landscape continues to evolve, addressing issues of bias, transparency, flexibility, and security becomes paramount for the responsible development and deployment of AI solutions. By fostering transparency, enforcing accountability, and proactively addressing vulnerabilities, we can unlock the true potential of AI while upholding ethical standards and building a safer, more inclusive future.

When should black box AI be used? A Balancing Act of Accuracy and Efficiency

While black box machine learning models present certain challenges due to their lack of transparency, they also offer advantages that make them valuable in specific scenarios. By examining these benefits closely, organisations can strategically adopt black box AI models to achieve higher accuracy, rapid conclusions, efficient inference, and streamlined automation, particularly in computer vision and natural language processing (NLP) applications.

Higher Accuracy: Unravelling Complex Patterns Unseen by Humans

Black box AI models excel at identifying intricate patterns in vast datasets, which often gives them higher prediction accuracy than more interpretable systems. This advantage is particularly valuable in domains such as computer vision and NLP, where complex structures and subtle nuances require sophisticated algorithms to detect and interpret. By harnessing black box models, organisations can achieve greater precision and make well-informed decisions based on patterns that might elude human observers.

Rapid Conclusions: Swift Execution with Minimal Expertise

Once trained, a black box model is essentially a fixed set of rules and equations, so producing an answer is quick and requires little domain expertise from the user. A simpler analogy is fitting a curve to measured data with least squares and then integrating the fitted function to estimate the area under the curve. This yields a usable answer without an exhaustive understanding of the process that generated the data. The ability to reach rapid conclusions is advantageous in time-sensitive scenarios, enabling efficient problem-solving without extensive expertise.
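The least-squares example can be made concrete. The sketch below fits a straight line y = m·x + c to a handful of noisy measurements using the closed-form ordinary least-squares formulas, then integrates the fitted line over an interval. The data points are hypothetical, and a straight line is an assumption chosen for brevity. The same approach works with higher-degree polynomials.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = m*x + c using the closed-form solution."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    c = mean_y - m * mean_x
    return m, c


def area_under_line(m, c, a, b):
    """Exact integral of m*x + c from a to b."""
    return m * (b ** 2 - a ** 2) / 2 + c * (b - a)


# Hypothetical noisy measurements of an underlying trend.
xs = [0, 1, 2, 3, 4]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
m, c = fit_line(xs, ys)
print(m, c, area_under_line(m, c, 0, 4))
```

For these numbers the fit gives a slope of about 1.97 and an intercept of about 1.06, so the estimated area over [0, 4] is about 20. The answer comes straight out of the fitted equation, with no model of what produced the measurements.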

Minimal Computing Power: Efficient Resource Utilisation

Once trained, many black box models are efficient to run: producing a prediction is a fixed sequence of arithmetic operations, which keeps the load on computational infrastructure modest. Training, by contrast, can be computationally expensive, especially for large deep learning models. Efficient inference is beneficial in resource-constrained environments, where optimising computing power is crucial to maintaining cost-effectiveness and scalability.
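In practice, efficiency applies mainly at prediction time, and the gap between running a model and training it is large. The sketch below estimates both for a hypothetical dense network by counting multiply-accumulate operations (MACs). The training estimate relies on a common rough rule of thumb, not an exact figure: a backward pass costs about twice a forward pass. The dataset size and epoch count are also illustrative assumptions.

```python
def forward_macs(layer_sizes):
    """Multiply-accumulate operations for one forward pass of a dense network."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def rough_training_macs(layer_sizes, n_examples, epochs, backward_factor=2):
    """Rule-of-thumb training cost: one forward pass plus a backward pass costing
    about `backward_factor` forward passes, for every example in every epoch."""
    per_example = forward_macs(layer_sizes) * (1 + backward_factor)
    return per_example * n_examples * epochs


sizes = [784, 256, 128, 10]  # hypothetical small classifier
print(forward_macs(sizes))  # 234752
print(rough_training_macs(sizes, n_examples=60000, epochs=10))  # 422553600000
```

A single prediction takes roughly a quarter of a million MACs, trivial for modern hardware. Training the same network under these assumptions takes over 400 billion.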

Automation: Streamlining Complex Decision-Making

Black box AI models excel at automating complex decision-making processes, minimising the need for frequent human intervention. By processing and analysing vast volumes of data, these models enable organisations to streamline operations, save time, and allocate resources more efficiently. Embracing automation through black box AI can boost productivity and free teams to focus on higher-level tasks, driving innovation and growth.

While black box AI models present challenges in terms of transparency and interpretability, their advantages in higher accuracy, rapid conclusions, efficient inference, and streamlined automation make them a valuable asset in specific applications. By carefully evaluating the suitability of black box models in each context, organisations can strike a balance between harnessing their power for superior performance and ensuring ethical and responsible AI deployment. With a strategic approach to black box AI integration, businesses can drive transformative outcomes and unlock new levels of efficiency and precision.

What is Responsible AI (RAI): Upholding Ethics and Social Responsibility

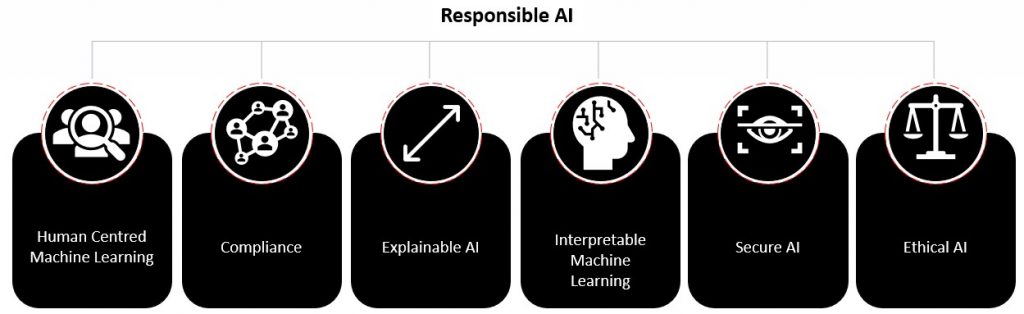

In the ever-evolving landscape of artificial intelligence, responsible AI (RAI) emerges as a beacon of ethical and social responsibility. Driven by legal accountability, RAI initiatives seek to reduce the negative financial, reputational, and ethical risks associated with black box AI and machine bias. By adhering to guiding principles and best practices, both consumers and producers of AI can foster fairness, transparency, accountability, ongoing development, and human supervision in AI systems.

Fairness: Empowering Equitable Treatment for All

Responsible AI systems prioritise fairness, ensuring that all individuals and demographic groups are treated without bias or discrimination. By avoiding the reinforcement or exacerbation of preexisting biases, RAI strives to create a level playing field and foster inclusivity. Ethical AI development involves meticulous evaluation and mitigation of bias throughout the entire AI lifecycle.
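Evaluating bias "throughout the lifecycle" usually means checking more than one fairness metric. Equal opportunity, for example, asks whether people who genuinely qualify for a positive outcome are approved at similar rates in every group, that is, whether the true positive rate (TPR) is similar. The sketch below computes the largest TPR gap between groups on hypothetical labels and predictions. It assumes each group contains at least one actual positive.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives that the model predicted as positive.
    Assumes the slice contains at least one actual positive."""
    predicted_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(predicted_for_positives) / len(predicted_for_positives)


def equal_opportunity_gap(y_true, y_pred, groups):
    """Return (largest TPR difference between groups, per-group TPR)."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        rates[g] = true_positive_rate([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical outcomes: y_true = actually qualified, y_pred = approved by the model.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["A"] * 5 + ["B"] * 5
gap, rates = equal_opportunity_gap(y_true, y_pred, groups)
print(rates, gap)  # {'A': 0.75, 'B': 0.5} 0.25
```

Here qualified applicants in group B are approved only half the time, versus three quarters in group A. This is the kind of disparity a fairness review would flag for investigation.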

Transparency: Illuminating the Inner Workings of AI

A cornerstone of RAI is transparency: AI systems are designed to be comprehensible and explainable to users and stakeholders. AI developers embrace openness by disclosing key details about how training data is collected, stored, and used. By shedding light on the decision-making process, responsible AI builds trust and empowers users to make informed decisions.
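One common way to disclose such details is a short, structured document published alongside the model, often called a model card. The sketch below shows a hypothetical minimal version as a Python dataclass serialised to JSON. The field names and values are illustrative assumptions rather than a standard format.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    """Minimal, hypothetical disclosure record published alongside a model."""
    name: str
    intended_use: str
    training_data_sources: list
    data_retention: str
    known_limitations: list = field(default_factory=list)

    def to_json(self):
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    name="loan-screening-v1",
    intended_use="First-pass screening; final decisions made by a human underwriter.",
    training_data_sources=["internal applications 2018-2022 (anonymised)"],
    data_retention="Raw applications deleted after 24 months.",
    known_limitations=["Under-represents applicants under 21."],
)
print(card.to_json())
```

Keeping the card as structured data makes it easy to publish it with each model version and to check automatically that required fields are filled in.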

Accountability: Upholding Responsibility for AI Actions

RAI emphasises accountability, holding organisations and individuals responsible for the judgments and actions taken by AI technology. Developers and users alike are obligated to ensure that AI systems adhere to ethical standards and comply with legal regulations. Proactive measures should be taken to minimise unintended consequences, instilling confidence in the responsible use of AI.

Ongoing Development: Adapting to Moral AI Concepts and Societal Norms

To keep RAI outputs aligned with evolving ethical principles and societal norms, continual monitoring and refinement are essential. Responsible AI is a dynamic, iterative process that adapts to changing circumstances, ensuring that the technology evolves responsibly in an ever-changing world.
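Continual monitoring often starts by checking whether the data a model sees in production still resembles the data it was trained on. A widely used measure is the population stability index (PSI), which compares two binned distributions. The sketch below computes it for hypothetical income-band proportions. A commonly quoted rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift. These are conventions, not fixed standards.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as proportions summing to 1.

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    `eps` replaces empty bins to avoid log(0) and division by zero.
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical share of applicants per income band at training time vs. this month.
training_bins = [0.25, 0.25, 0.25, 0.25]
current_bins = [0.10, 0.20, 0.30, 0.40]
print(population_stability_index(training_bins, training_bins))  # 0.0
print(population_stability_index(training_bins, current_bins))
```

The second value is about 0.23, a moderate shift under the rule of thumb above and a reasonable trigger for reviewing or retraining the model.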

Human Supervision: Striking a Balance with Human Intervention

Every AI system designed under RAI must incorporate human supervision and intervention when appropriate. This principle recognises the value of human judgment, expertise, and empathy, ensuring that AI augments human decision-making rather than replacing it. Human involvement acts as a safeguard against potential errors and helps maintain ethical standards in AI applications.
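A simple way to build human supervision into an automated pipeline is to route each model output either to automatic execution or to a human reviewer. The hypothetical policy below automates an output only when the model’s confidence meets a threshold, and always sends certain outcomes (here, denials) to a person. The threshold, labels, and policy are illustrative assumptions. Note that confidence scores from many black box models are not well calibrated, so thresholds should be validated on real data.

```python
def route(prediction, confidence, threshold=0.9, always_review=("deny",)):
    """Decide whether a model output can be acted on automatically.

    Outputs listed in `always_review` (e.g. adverse decisions) always go to a
    person; anything else is automated only if confidence meets the threshold.
    """
    if prediction in always_review or confidence < threshold:
        return "human_review"
    return "auto"


cases = [("approve", 0.97), ("approve", 0.62), ("deny", 0.99)]
for prediction, confidence in cases:
    print(prediction, confidence, route(prediction, confidence))
# approve 0.97 auto
# approve 0.62 human_review
# deny 0.99 human_review
```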

Responsible AI (RAI) stands as a crucial paradigm in the development and utilisation of artificial intelligence. By adhering to guiding principles such as fairness, transparency, accountability, ongoing development, and human supervision, RAI fosters a culture of ethical and socially responsible AI implementation. As the AI landscape continues to advance, embracing responsible AI practices becomes instrumental in building trust, promoting inclusivity, and shaping a future where AI technology positively impacts society while safeguarding human values.

Black box AI vs. white box AI

In artificial intelligence, two contrasting approaches, Black Box AI and White Box AI, offer distinct advantages suited to different applications and goals. Black Box AI remains opaque in its internal workings but often achieves high accuracy and efficiency. White Box AI provides transparency and interpretability, which suits decision-making scenarios where the reasoning must be understood. Below we look at the defining features of each approach, their respective strengths, and their best-fit applications.

Black Box AI: Harnessing Opacity for Accuracy and Efficiency

In Black Box AI, the inputs and outputs of the system are known, but its internal mechanisms are inscrutable or difficult to understand fully. The term is most often applied to deep neural networks, which are trained extensively on large datasets and adjust their internal weights and parameters accordingly. Black box models excel at tasks like image and speech recognition, where rapid and precise data classification is paramount.

Distinct Features of Black Box AI:
a. High Accuracy and Efficiency: Black box AI often achieves remarkable accuracy and efficiency in complex tasks due to its ability to identify intricate patterns within vast datasets.
b. Non-linear Models: Deep neural networks and ensemble methods such as gradient boosting and random forests are highly non-linear and can be challenging to explain.
c. Limited Transparency: The opacity of black box AI impedes direct interpretation, making it challenging to understand the decision-making process.

White Box AI: Embracing Transparency for Interpretability

White Box AI, in contrast, offers transparency and comprehensibility in its internal workings, allowing users to understand how conclusions are reached. This approach finds its niche in decision-making applications, such as medical diagnosis or financial analysis, where understanding the reasoning behind AI judgments is vital.

Distinct Features of White Box AI: White box AI’s transparent nature empowers data scientists to examine algorithms, comprehend their behaviour, and identify the variables influencing outcomes.

Debugging and Troubleshooting: Due to its interpretable design, white box AI is more straightforward to debug and troubleshoot when issues arise.

Linear and Decision Tree Models: White box AI often relies on linear and logistic regression, decision trees, and regression trees, whose decisions can be read directly from their coefficients or branching rules.
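For a linear model, an explanation comes almost for free: the score is a sum of per-feature terms (weight × value) plus a bias, so each term shows exactly how much that feature pushed the decision up or down. The sketch below breaks a hypothetical credit-scoring model’s output into those contributions. The feature names, weights, and input are illustrative assumptions. A decision tree offers the analogous explanation as the chain of if-then splits along the path an input follows.

```python
def explain_linear(weights, bias, x, feature_names):
    """Break a linear model's score into per-feature contributions w_i * x_i."""
    contributions = {name: w * v for name, w, v in zip(feature_names, weights, x)}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical credit-scoring model with standardised features.
names = ["income", "debt_ratio", "years_employed"]
weights = [0.8, -1.2, 0.3]
bias = 0.1
score, parts = explain_linear(weights, bias, [1.5, 0.5, 2.0], names)
print(f"score: {score:.2f}")
# List features from most to least influential.
for name, contribution in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {contribution:+.2f}")
```

The contributions add up exactly to the score (minus the bias), which is what makes this kind of model straightforward to audit and debug.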

The Evolution of White Box AI: As AI continues to evolve, white box approaches, along with techniques that make even neural networks more interpretable, are gaining popularity because of the accountability and transparency they offer. This trajectory is promising, as it aligns with societal demands for responsible AI deployment.

The distinction between Black Box AI and White Box AI underscores the importance of selecting the appropriate approach based on specific AI applications and objectives. While black box models excel in accuracy and efficiency for tasks like image and speech recognition, white box AI finds its strengths in transparent decision-making applications. As AI technology advances, the rising prominence of white box AI, coupled with its interpretability and accountability, will shape a future where AI solutions strike a harmonious balance between efficiency and ethical responsibility.